Jairo Pava is a Senior Software Engineering Manager at Panorama Education. He supports the New Engineering Foundations squad, which explores emerging use cases for Generative AI in education technology.

Artificial intelligence holds incredible promise for transforming education, and educators are starting to embrace its potential. In fact, 77% of educators believe AI is useful. However, concerns about vulnerabilities such as misuse, data privacy, and bias highlight the importance of addressing security risks as districts explore how to integrate AI responsibly.

By focusing on proactive solutions to these concerns, schools can unlock the full benefits of AI while safeguarding students, staff, and systems. In this post, we’ll dive into the latest research and share best practices to help districts navigate AI adoption with confidence and security.

What Are the Top AI Security Concerns in Education?

AI tools have the potential to streamline administrative tasks and provide actionable recommendations to meet a variety of school needs. These innovations can free up valuable time and resources for educators and administrators.

However, with these opportunities come important considerations. To ensure the safe and responsible use of AI in education, it’s crucial to address the potential security risks associated with its implementation. Here are some of the most pressing concerns to keep in mind:

- Cybersecurity Vulnerabilities: AI models can be tricked by carefully crafted malicious inputs (such as “prompt injection” attacks or “adversarial examples”) that lead to incorrect or harmful outputs.

- Data Privacy Risks: Large Language Models (LLMs) are trained on large amounts of data, which could include user-provided information. Privacy violations can occur when sensitive personal information, such as student academic records, is used to train AI models or when responses containing sensitive data are mishandled.

- False Information & Algorithmic Bias: AI systems can make mistakes. Their accuracy depends on the quality and diversity of the data they’re trained on. When that data is skewed by biases or missing context, AI outputs can mirror those inaccuracies and perpetuate the same biases.

- Statistical Limitations: Even with extensive training, modern machine learning models still struggle with problems that require a deep understanding of complex situations. For instance, AI might excel at analyzing test scores to predict academic performance, but would not know how to factor in issues like a student’s emotional well-being or unique learning challenges.

- Transparency Challenges: Many AI models operate as black boxes, meaning their decision-making processes are not easily understood, even by experts. This lack of clarity can make it difficult for administrators to trust their outputs.

- Creating a Balanced Approach: While AI can streamline processes and provide valuable insights, critical decisions require human judgment. Instead of replacing human expertise, AI should be used to complement it, ensuring a balanced approach that combines the strengths of both.

Training Educators on AI Awareness

Although many teachers are eager to learn about AI, a significant number report not receiving formal training from their schools or districts. In fact, a 2024 survey by the EdWeek Research Center found that 58% of teachers had not received any training on AI, despite its growing presence in education.

To ensure secure and effective AI integration, district leaders must prioritize equipping teachers and administrators with the necessary knowledge and skills to navigate these technologies responsibly.

Here are some strategies to empower educators with practical skills they need to use AI securely and effectively:

- Comprehensive Professional Development: Offer structured programs exploring AI capabilities and limitations. This could include webinars, Q&A events, and online modules with interactive content covering AI applications in education and secure data handling processes.

- Ethical Use Workshops: Deliver interactive workshop sessions on responsible AI integration. These workshops can explain how to prevent bias in AI, maintain fairness in student outcomes, and use AI to design inclusive learning experiences.

- Hands-on Experience: Provide practical training using real-world AI education tools. Set aside time for teachers to experiment with the applications and practice completing AI-supported tasks. This can help them build confidence and familiarity with how the tools work.

- Open Dialogue Platforms: Create spaces for discussing AI challenges and opportunities. Monthly roundtables or online forums can enable educators to share their experiences with AI, discuss concerns, and brainstorm solutions.

- Privacy-Conscious Practices: Develop student data protection protocols. Offer definitive guidelines on what student information can be provided to AI platforms and implement mandatory sessions that explain how to avoid accidental data exposure when providing prompts to AI tools.

- Critical Evaluation Skills: Empower educators to assess AI effectiveness for themselves by guiding them on how to review AI-generated reports for accuracy and relevance. Also, help them compare multiple AI solutions. This can help build trust in AI tools and increase adoption.

Looking for practical ideas, strategies, and tools to empower educators to use AI securely and effectively?

Join us at AIM FOR IMPACT, a Panorama summit for school and district leaders who want to harness the power of AI and build future-ready schools to prepare students for tomorrow.

How to Safeguard Student Data in AI-Enhanced Educational Environments

Student data privacy is a top priority for schools and districts, but varying state-level requirements often complicate the adoption of standardized AI guidelines. As of now, only 22 states have issued AI education guidelines, leaving many schools to develop their own policies. While this approach offers flexibility, it can also lead to gaps in oversight and security.

To ensure the protection of student data and compliance with relevant laws and regulations, schools and districts should adopt these best practices:

- Establish Clear Data Governance Policies: Schools should implement robust policies that define how student data is collected, stored, and used. These policies should align with federal laws like FERPA (Family Educational Rights and Privacy Act) and state-specific regulations.

- Conduct Regular Risk Assessments: Periodic audits of AI tools and platforms can help identify vulnerabilities and ensure compliance with security standards.

- Invest in Staff Training: Educators and administrators should be trained to understand the implications of AI usage, including recognizing potential risks and following established security protocols (such as checking AI responses for inaccuracies or sensitive student data before sharing).

- Limit Data Collection: Adopt a "minimum necessary data" approach, collecting only the information required to achieve educational outcomes. This reduces the risk of sensitive data exposure (for example, by attaching student information to a prompt only when it is actually needed).

- Partner with Trusted Vendors: Work with technology providers that demonstrate strong commitments to data privacy and security, including adherence to industry certifications such as SOC 2.

- Engage Stakeholders in Policy Development: Involve parents, students, and educators in creating AI guidelines to ensure they are comprehensive and address community concerns.
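For districts with technical staff, the "minimum necessary data" idea can be made concrete with a small sketch. The Python below is illustrative only: the field names, the allow-list, and the 7-digit student ID format are assumptions invented for this example, and the simple patterns will not catch every identifier. It shows two habits described above: passing along only the fields a task needs, and masking obvious identifiers in text before it is shared.

```python
import re

# Hypothetical allow-list: only the fields this particular task needs.
ALLOWED_FIELDS = frozenset({"grade_level", "attendance_rate", "reading_score"})

# Simple patterns for obvious identifiers; illustrative, not exhaustive.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
STUDENT_ID_RE = re.compile(r"\b\d{7}\b")  # assumes 7-digit student IDs


def minimize_record(record, allowed=ALLOWED_FIELDS):
    """Keep only allow-listed fields from a student record."""
    return {key: value for key, value in record.items() if key in allowed}


def redact_identifiers(text):
    """Mask email addresses and ID-like numbers in free text."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return STUDENT_ID_RE.sub("[STUDENT_ID]", text)


if __name__ == "__main__":
    record = {
        "name": "Jane Doe",
        "student_id": "1234567",
        "grade_level": 7,
        "attendance_rate": 0.91,
    }
    print(minimize_record(record))
    print(redact_identifiers("Contact jane@school.org about 1234567"))
```

Pattern matching like this is a safety net, not a substitute for the policies and training above; names and other free-text details still need a human check before anything is shared.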

How Does Panorama Solara Address AI Security Concerns?

Teachers are stretched thin, with 84% reporting that their time is consumed by administrative tasks. AI offers a solution by streamlining tasks like lesson planning and engagement analysis, freeing up valuable time for educators to focus on what matters most: supporting their students.

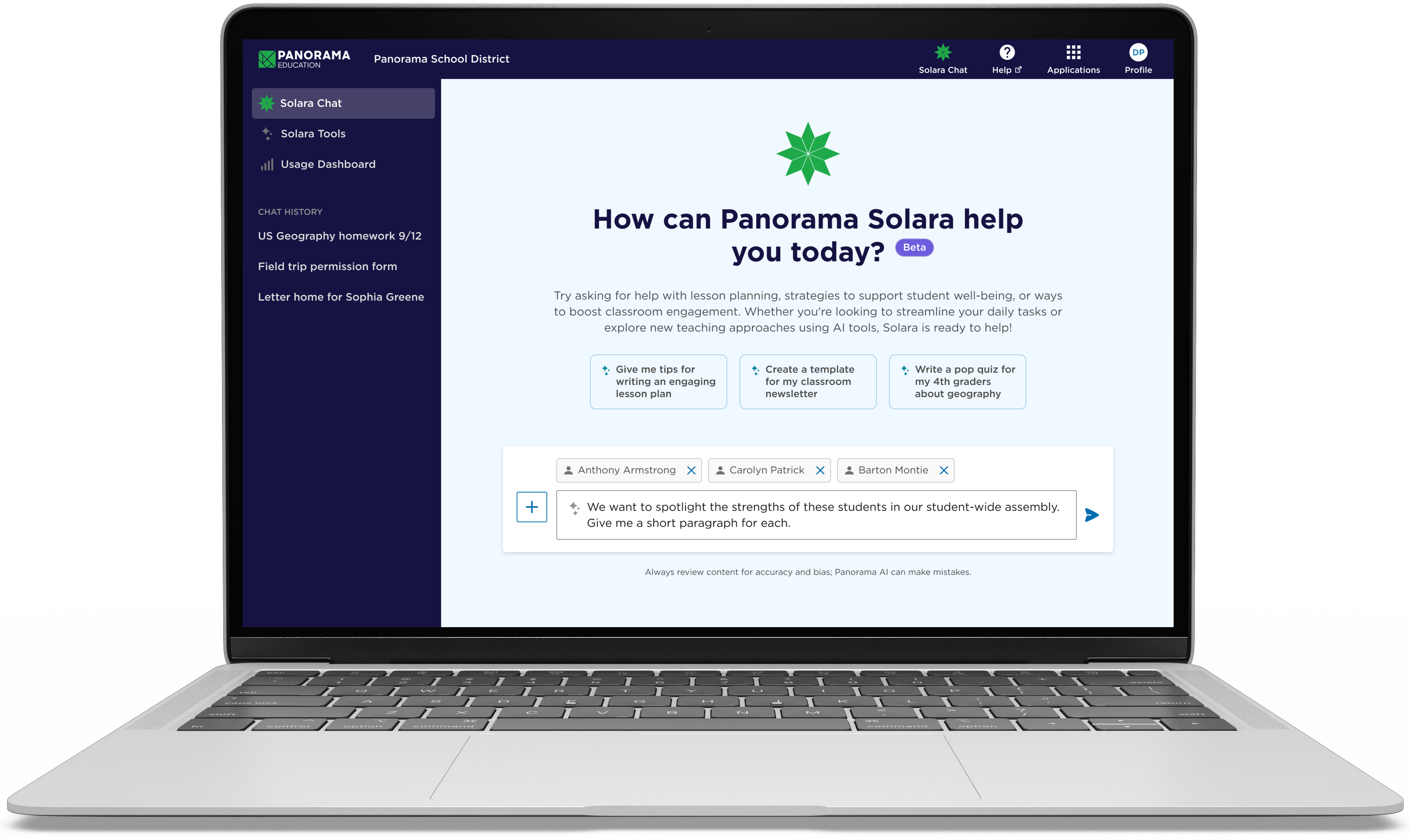

Panorama Solara is a leading customizable, district-wide AI platform that streamlines student support while ensuring robust data protection and privacy. Tailored to support your district’s goals and practices, Panorama Solara fits seamlessly into your district processes, so it works the way you do.

Here’s why academic leaders trust Panorama Solara AI in their schools and districts:

- Privacy-First Infrastructure: Data is stored securely and never leaves Panorama's platform. Comprehensive security and privacy policies ensure confidentiality consistent with school and district needs.

- No External Model Training: Panorama Solara ensures input data is never used to train third-party AI models, protecting sensitive information.

- Guardrails for Education: Solara is designed to provide text-only responses on educational topics, ensuring secure interactions and minimizing the risk of manipulation or misuse through misleading prompts.

- Data-Driven Answers: While human judgment is essential for verifying answers, Solara enhances decision-making by integrating a robust collection of evidence-based student intervention strategies and, when appropriate, providing actionable insights about individual students.

- Compliance with Standards: Solara complies with SOC 2 data security and governance standards and the Family Educational Rights and Privacy Act (FERPA).

- Data Encryption: To protect information from unauthorized access, Solara employs encryption protocols for data in transit and at rest.

- Role-Based Access Controls: The system limits access to sensitive information based on user roles. Teachers, administrators, and district leaders only see the data they need, reducing the risk of misuse. If an educator decides to share a Solara conversation, it is only accessible by authenticated district users.

- Audit Trails for Transparency: Solara maintains comprehensive logs of all platform activities to meet governance and audit needs.

- Testing & Monitoring: Periodic testing and examination by our teams (including adversarial tests) ensures Solara remains accurate, safe, and aligned with educational goals.

- Professional Development: Panorama's training focuses on developing a common understanding of AI, use cases for AI in K-12, and considerations for safe and ethical use.
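To make role-based access controls and audit trails less abstract, here is a generic Python sketch of how the two ideas fit together. It is not Solara's implementation: the roles, field names, and the `view_record` helper are all hypothetical, chosen only to show that each role sees a limited set of fields and that every access leaves a log entry.

```python
from datetime import datetime, timezone

# Hypothetical mapping of roles to the record fields they may see.
ROLE_FIELDS = {
    "teacher": {"grade_level", "attendance_rate"},
    "counselor": {"grade_level", "attendance_rate", "intervention_notes"},
}

# In-memory audit trail for illustration; a real system would persist this.
audit_log = []


def view_record(user, role, record):
    """Return only the fields the role may see, and log the access."""
    allowed = ROLE_FIELDS.get(role, set())  # unknown roles see nothing
    visible = {key: value for key, value in record.items() if key in allowed}
    audit_log.append({
        "user": user,
        "role": role,
        "fields": sorted(visible),
        "at": datetime.now(timezone.utc).isoformat(),
    })
    return visible


if __name__ == "__main__":
    record = {
        "grade_level": 7,
        "attendance_rate": 0.91,
        "intervention_notes": "Weekly check-in",
    }
    print(view_record("t.nguyen", "teacher", record))
    print(view_record("guest", "visitor", record))
    print(len(audit_log), "accesses logged")
```

Defaulting unknown roles to an empty set means that a misconfigured account sees nothing instead of everything, which is the safer failure mode.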

With Panorama Solara, educators can access a secure chat interface with education best practices and district-specific customizations. Using pre-built prompts, they can create personalized student supports—like intervention plans and family letters—faster and more efficiently.

For administrators, Panorama Solara offers full visibility into usage trends, ensuring that AI activity remains private, secure, and aligned with district standards. It's the essential platform for safe and effective AI in today’s schools and districts.
