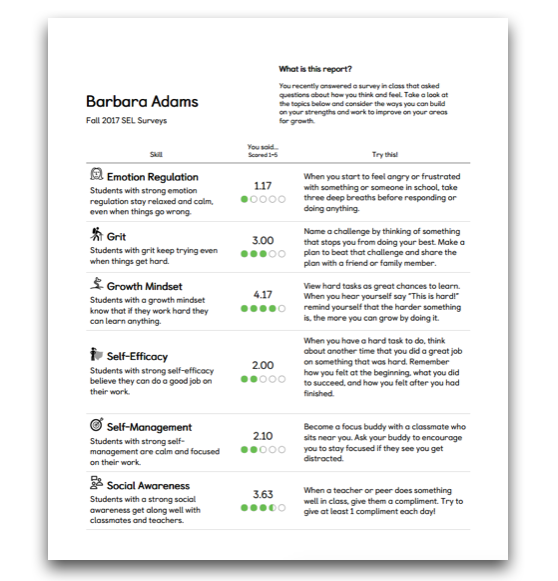

School districts want student voice data they can trust. That starts with survey measures that meet research standards for reliability and validity, which is the evidence that the survey topics are sound.

In an analysis of our student survey topics, the Panorama research team used data from approximately 3,500,000 student survey takers in more than 5,900 schools to assess the reliability and validity of our measures.

Learn about the three hallmarks of a high-quality, research-backed student survey.

The updated report covers all of the topics on our student surveys—including those that measure students’ perceptions of themselves, their school, classrooms, and teachers. So what does the report show in terms of reliability and validity?

1. Almost all students answered all of the questions in a topic, effectively eliminating the issue of non-response bias.

On average, 95.7 percent of students answered every question in a given topic. Even the topic with the lowest full-completion rate, Self-Management, had 93.3 percent of respondents completing every question, meaning only 6.7 percent of respondents skipped one or more questions.
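As a rough illustration of what a full-completion rate measures, the sketch below counts the share of respondents who answered every question in a topic. The data layout (one dictionary per student, with `None` marking a skipped question) and the names `full_completion_rate`, `sample_responses`, `q1`, and `q2` are assumptions made for this example; they are not taken from the report.

```python
from typing import Optional


def full_completion_rate(
    responses: list[dict[str, Optional[int]]], items: list[str]
) -> float:
    """Return the share of respondents who answered every item in a topic."""
    if not responses:
        raise ValueError("need at least one respondent")
    # A respondent counts as complete only if no item in the topic was skipped.
    complete = sum(
        all(r.get(item) is not None for item in items) for r in responses
    )
    return complete / len(responses)


# Hypothetical data: four students, a two-question topic, one skipped answer.
sample_responses = [
    {"q1": 4, "q2": 5},
    {"q1": 3, "q2": None},  # skipped q2, so not a full completion
    {"q1": 2, "q2": 2},
    {"q1": 5, "q2": 4},
]

rate = full_completion_rate(sample_responses, ["q1", "q2"])
print(f"Full-completion rate: {rate:.1%}")
```

In this toy data, three of the four students answered both questions, so the rate is 75 percent. The share who skipped at least one question is simply one minus that rate.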

Why Does This Matter? When a sizable portion of students skip questions, we cannot be sure that the responses we do have represent all students. This problem in survey research is called non-response bias (Holt & Elliot, 1991). But with the high completion rates we're reporting, we have evidence that for our survey results, non-response bias is a non-issue.

2. Our survey topics exhibit strong reliability and structural validity.

We can think of reliability as how well the questions making up a topic "hang together" (DeVellis, 2016; Streiner, 2003). Relatedly, structural validity reflects whether each topic measures only a single construct and not multiple constructs (Messick, 1995a). Looking at some of the questions making up Emotion Regulation—a topic that was in the middle of the pack on reliability and structural validity—helps us make intuitive sense of this finding. Questions include:

- "How often are you able to pull yourself out of a bad mood?"

- "Once you get upset, how often can you get yourself to relax?"

Both of these questions appear to be driving at the same construct, and that's what our data revealed as well.
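To make "hanging together" concrete, here is a minimal sketch of Cronbach's alpha, one widely used reliability statistic. This is an assumption for illustration: this article does not name the specific statistics used in the report, and the function name `cronbach_alpha` and the sample scores are hypothetical.

```python
from statistics import variance


def cronbach_alpha(rows: list[tuple[float, ...]]) -> float:
    """Cronbach's alpha for complete responses.

    Each row is one respondent's answers to the k items in a topic.
    alpha = k / (k - 1) * (1 - sum(item variances) / variance(total score))
    """
    if len(rows) < 2:
        raise ValueError("need at least two respondents")
    k = len(rows[0])
    if k < 2:
        raise ValueError("need at least two items")
    # Sample variance of each item (column) across respondents.
    item_variances = [variance(column) for column in zip(*rows)]
    # Sample variance of each respondent's summed score.
    total_variance = variance([sum(row) for row in rows])
    if total_variance == 0:
        raise ValueError("total scores have no variance")
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical answers from five students to two items on a 1-5 scale.
emotion_regulation = [(4, 5), (2, 2), (3, 4), (5, 5), (1, 2)]
alpha = cronbach_alpha(emotion_regulation)
print(f"Cronbach's alpha: {alpha:.2f}")
```

When students who score high on one question also tend to score high on the other, the summed score varies much more than the individual items do, and alpha approaches 1.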

Why Does This Matter? The statistics that reflect reliability and structural validity showed that our topics are strong on both, suggesting that the questions that make up a topic really do belong together as a single topic.

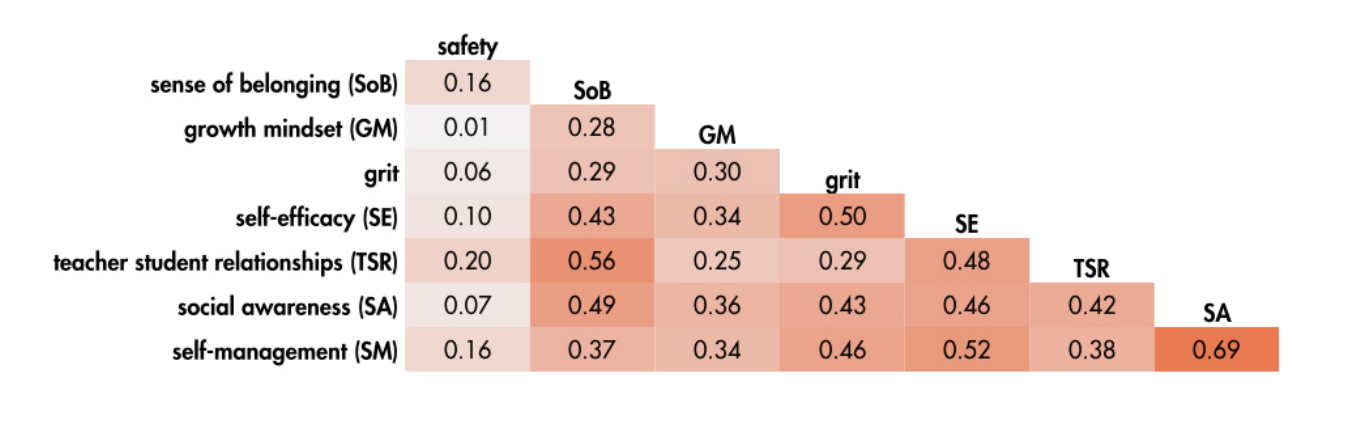

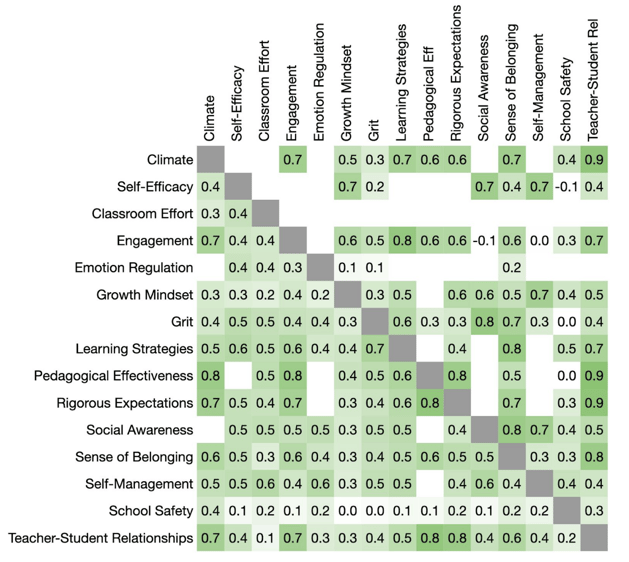

3. Our topics show both convergent and discriminant validity.

By that, we mean that our topics show higher correlations with theoretically related constructs and lower correlations with theoretically unrelated constructs. For example, prior research led us to expect that Teacher-Student Relationships, Rigorous Expectations, Sense of Belonging, and Climate would be relatively more related to each other (Anderman, 2003; Brinkworth, McIntyre, Juraschek, & Gehlbach, 2018; Lee, 2012). That is exactly what we found in our analyses, as you can see in the visual below.

Topic Intercorrelations

Note: We calculated Spearman rank-order correlations to minimize measurement assumptions (see Chen & Popovich, 2002). Values above the diagonal are school-level correlations (between school-level mean topic scores), and numbers below the diagonal are student-level correlations. Blank cells indicate topic pairs with insufficiently sized samples (fewer than 20 schools or 500 students).
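The note above describes two kinds of correlations. The sketch below shows one way they could be computed: a Spearman rank-order correlation (a Pearson correlation on average ranks) applied first to student scores and then to school-level mean scores, with a cell left blank when the sample is too small. The record layout, the function names, and the sample values are assumptions for illustration, not the research team's actual code or data.

```python
from collections import defaultdict
from statistics import mean
from typing import Optional


def average_ranks(values: list[float]) -> list[float]:
    """Rank values from 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # average of 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    return ranks


def pearson(x: list[float], y: list[float]) -> float:
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    if sx == 0 or sy == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / (sx * sy)


def spearman(x: list[float], y: list[float]) -> float:
    """Spearman rank-order correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def spearman_or_blank(x: list[float], y: list[float], min_n: int) -> Optional[float]:
    """Return None (a blank cell) when the sample is smaller than min_n."""
    return spearman(x, y) if len(x) >= min_n else None


def school_means(records: list[dict], topic: str) -> dict[str, float]:
    """Mean topic score per school."""
    by_school: dict[str, list[float]] = defaultdict(list)
    for r in records:
        by_school[r["school"]].append(r[topic])
    return {school: mean(scores) for school, scores in by_school.items()}


# Hypothetical student records with two topic scores each.
records = [
    {"school": "A", "belonging": 4.0, "relationships": 4.5},
    {"school": "A", "belonging": 3.0, "relationships": 3.5},
    {"school": "B", "belonging": 2.0, "relationships": 2.5},
    {"school": "B", "belonging": 3.5, "relationships": 3.0},
    {"school": "C", "belonging": 5.0, "relationships": 4.0},
    {"school": "C", "belonging": 4.5, "relationships": 5.0},
]

# Student-level: correlate individual scores.
student_rho = spearman(
    [r["belonging"] for r in records], [r["relationships"] for r in records]
)

# School-level: correlate the school mean scores.
belonging_means = school_means(records, "belonging")
relationship_means = school_means(records, "relationships")
schools = sorted(belonging_means)
school_rho = spearman(
    [belonging_means[s] for s in schools],
    [relationship_means[s] for s in schools],
)

print(f"student-level rho: {student_rho:.2f}, school-level rho: {school_rho:.2f}")
# With the thresholds from the note (20 schools, 500 students), this toy
# sample is far too small, so both cells would be left blank.
print(spearman_or_blank([belonging_means[s] for s in schools],
                        [relationship_means[s] for s in schools], min_n=20))
```

Because Spearman's correlation depends only on the rank order of scores, it does not assume that the distance between adjacent response options is equal, which is the sense in which it reduces measurement assumptions.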

Why Does This Matter? These patterns of correlations indicate that our topics measure what they aim to measure.

We've just given an overview of reliability and validity of our student survey topics, but we want to point out that the reliability and validity of a survey depend on how it is used (Messick, 1995b). It's important to keep in mind who the survey was designed for and to create the conditions at each school that will give rise to honest responses from students.

Approached in this way, Panorama's surveys offer schools and districts a research-backed means for measuring the variables that undergird child development and academic growth.

You can view our full 2025 reliability and validity report here.